UC7 - Human-Robot Collaboration in a Disassembly Process with Workers with Disabilities

- MGEP Mondragon Unibertsitatea Goi Eskola Politeknikoa: Mondragon Goi Eskola Politeknikoa S.Coop. (MGEP) is the Faculty of Engineering of MONDRAGON UNIVERSITY. MGEP has a socially oriented initiative and vocation, and has been declared a non-profit university of common public interest.

- Universidad de Castilla-La Mancha (UCLM): a Spanish university created in 1985 and the flagship higher education institution of the Castilla-La Mancha region. UCLM has four campuses (Albacete, Ciudad Real, Cuenca and Toledo) and also has university sites in Talavera de la Reina and Almadén.

- PUMACY TECHNOLOGIES AG: a leading provider of knowledge and data analytics solutions. Its smart service solutions cover the technical, methodological and organisational aspects of data analytics and related context-sensitive solutions. These include the use of cyber-physical systems, industrial IoT components and sensor-based wearable solutions in industrial environments.

- Eskisehir Osmangazi University (ESOGU): development of verification and validation methods for the safety and security of industrial robots. The developed methods will also be validated in a TRL-5 laboratory environment at the Eskisehir Osmangazi University Intelligent Factory and Robotics Laboratory (ESOGU-IFARLAB, ifarlab.ogu.edu.tr).

This use case, Human-Robot Collaboration in a Disassembly Process with Workers with Disabilities, aims to develop and validate a collaborative robotic disassembly solution for end-of-life products that is adapted to work with people with disabilities. The solution will give people with reduced working capabilities the means to improve their working conditions and increase their productivity, improving their ability to find meaningful work and a place in the labour market. It will provide technologies that enable collaboration through intelligent human-robot interaction (e.g. natural interaction interfaces such as voice and possibly gestures) and through learning from the monitoring of the disassembly process. Additionally, augmented reality technologies will be used to validate the robotic solution in different virtual scenarios before it is implemented in a real environment.

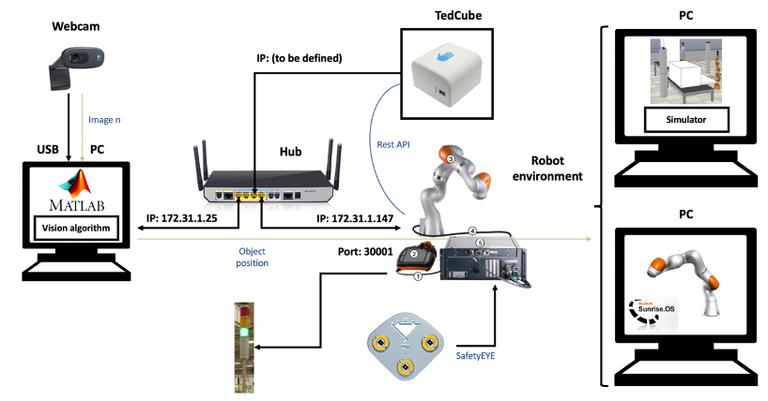

The robotic solution will be based on the KUKA LBR iiwa serial manipulator. In terms of safety, this robot complies with Performance Level d, Category 3, meeting the requirements of the EN ISO 13849 standard on the safety of machinery. The LBR iiwa has seven degrees of freedom, which gives it greater versatility of movement: because it is kinematically redundant, it can reach the same position with more than one axis configuration. In addition, it has torque sensors in each of its axes, which enable direct and safe physical interaction between the robot and the operator.

The figure shows the generic hardware architecture of the proposed robotic solution. The interaction between the operator and the robot will be carried out through the TedCube voice management device. In addition, a camera will be incorporated to monitor the robot's movement, together with a computer to program its motion. All these devices will be connected to an Ethernet network, each with its own IP address, and linked to one another through a router.
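The claim that a seven-axis arm can reach a position with more than one axis configuration is easiest to see on a smaller analogue. The sketch below is an illustration only, assuming a hypothetical planar arm with three joints and made-up link lengths (it is not the LBR iiwa's kinematics): a 2-D position task uses two degrees of freedom, so one is spare. The functions `forward_kinematics`, `inverse_kinematics`, `jacobian` and `self_motion_direction` are names introduced for this example.

```python
import math

# Hypothetical link lengths in metres for a planar 3-joint arm.
# This is a toy analogue of redundancy, NOT the LBR iiwa's kinematics.
L1, L2, L3 = 0.4, 0.4, 0.1


def forward_kinematics(q):
    """Tool-tip (x, y) of the planar arm for joint angles q = (q1, q2, q3) in radians."""
    q1, q2, q3 = q
    a1, a12, a123 = q1, q1 + q2, q1 + q2 + q3  # absolute link angles
    x = L1 * math.cos(a1) + L2 * math.cos(a12) + L3 * math.cos(a123)
    y = L1 * math.sin(a1) + L2 * math.sin(a12) + L3 * math.sin(a123)
    return x, y


def inverse_kinematics(target, phi, elbow=1):
    """One joint configuration that puts the tool tip at `target`.

    The position task has 2 DOF and the arm has 3 joints, so one DOF is spare.
    Here it is parameterised by `phi`, the absolute angle of the last link;
    `elbow` (+1 or -1) picks one of the two 2-link solutions.
    Returns None if the target cannot be reached with this phi.
    """
    # Wrist point: where the second link must end so the last link reaches the target.
    wx = target[0] - L3 * math.cos(phi)
    wy = target[1] - L3 * math.sin(phi)
    # Law of cosines for the 2-link sub-chain.
    c2 = (wx * wx + wy * wy - L1 * L1 - L2 * L2) / (2 * L1 * L2)
    if abs(c2) > 1.0:
        return None
    q2 = elbow * math.acos(c2)
    q1 = math.atan2(wy, wx) - math.atan2(L2 * math.sin(q2), L1 + L2 * math.cos(q2))
    q3 = phi - q1 - q2  # makes the last link's absolute angle equal to phi
    return q1, q2, q3


def jacobian(q):
    """Rows dx/dq and dy/dq of the 2x3 position Jacobian."""
    q1, q2, q3 = q
    a1, a12, a123 = q1, q1 + q2, q1 + q2 + q3
    s1, s12, s123 = math.sin(a1), math.sin(a12), math.sin(a123)
    c1, c12, c123 = math.cos(a1), math.cos(a12), math.cos(a123)
    row_x = (-(L1 * s1 + L2 * s12 + L3 * s123), -(L2 * s12 + L3 * s123), -L3 * s123)
    row_y = (L1 * c1 + L2 * c12 + L3 * c123, L2 * c12 + L3 * c123, L3 * c123)
    return row_x, row_y


def self_motion_direction(q):
    """Joint velocity direction that leaves the tool tip (instantaneously) still.

    For a 2x3 Jacobian of rank 2, the cross product of its two rows is
    orthogonal to both rows, so it spans the one-dimensional null space.
    """
    (a, b, c), (d, e, f) = jacobian(q)
    return (b * f - c * e, c * d - a * f, a * e - b * d)


if __name__ == "__main__":
    target = (0.5, 0.3)
    for phi in (0.0, 0.5, 1.0):
        q = inverse_kinematics(target, phi)
        x, y = forward_kinematics(q)
        print(f"phi={phi:.1f}  q={[round(v, 3) for v in q]}  tip=({x:.6f}, {y:.6f})")
```

Each value of `phi` yields a different set of joint angles, yet the tool tip lands on the same point. On a seven-axis arm performing a six-dimensional position-and-orientation task, the single spare degree of freedom can be used in a similar way, for example to move the elbow away from the operator while the tool stays in place.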

Regarding safety and security, collaborative robotics provides a working environment shared by the operator and the robot, in which the worker contributes flexibility and problem-solving ability while the robot contributes strength and accuracy. However, collaborative robotics is not yet a widely exploited field, mainly because the absence of fences increases both the actual risk and the perceived risk. Based on the experience and knowledge of companies that employ workers with some kind of disability, this perception of risk can be expected to be even more pronounced among these workers.

Currently, the most widely used safety standards in collaborative robotics are ISO/TS 15066:2016, ISO 10218-1:2011 and ISO 10218-2:2011. The latter sets out four strategies for reducing risk in collaborative robotics: 1) safety-rated monitored stop, 2) hand guiding, 3) speed and separation monitoring, and 4) power and force limiting by inherent design or control. The safety of this use case will be based on ISO/TS 15066:2016 and on the third strategy of ISO 10218-2:2011, speed and separation monitoring. This strategy monitors the distance between the worker and the robotic cell and regulates the robot's speed depending on the safety zone in which the operator is located. The use of safetyEYE® technology is being considered for this purpose.

To verify the system, simulations will be performed in the Gazebo simulator to identify possible improvements at an early stage. Later, as mentioned above, augmented reality will be used to validate the solution with the operators in different proposed scenarios (human in the loop). Finally, a validation in a relevant environment is expected to be performed.
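Speed and separation monitoring, the strategy chosen for this use case, can be sketched as follows. The sketch assumes the simplified constant-speed form of the protective separation distance from ISO/TS 15066, S_p = v_h(T_r + T_s) + v_r·T_r + S_s + C + Z_d + Z_r, and additionally assumes constant deceleration so that the stopping distance is S_s = v_r·T_s / 2. All parameter values, the speed levels and the names `SsmParameters`, `protective_separation_distance` and `allowed_speed` are hypothetical; the measured distance would come from a sensor such as the safetyEYE system under consideration, which is not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SsmParameters:
    """Illustrative inputs (SI units) for the constant-speed protective separation distance.

    Every default is an assumption for this example, not a value for the
    LBR iiwa, safetyEYE or any real cell; a real cell needs measured values.
    """
    v_human: float = 1.6     # v_h: operator approach speed, m/s (assumed walking speed)
    t_reaction: float = 0.1  # T_r: sensing + controller reaction time, s
    t_stop: float = 0.3      # T_s: robot stopping time, s
    intrusion: float = 0.2   # C: intrusion distance, m
    z_detect: float = 0.05   # Z_d: operator position uncertainty of the sensor, m
    z_robot: float = 0.01    # Z_r: robot position uncertainty, m


def protective_separation_distance(v_robot, p):
    """S_p for a robot moving at v_robot (m/s) towards the operator."""
    s_h = p.v_human * (p.t_reaction + p.t_stop)  # operator motion until the robot has stopped
    s_r = v_robot * p.t_reaction                 # robot motion before it starts braking
    s_s = 0.5 * v_robot * p.t_stop               # braking distance, assuming constant deceleration
    return s_h + s_r + s_s + p.intrusion + p.z_detect + p.z_robot


# Hypothetical speed levels of the cell, m/s.
SPEED_LEVELS = (1.0, 0.5, 0.25)


def allowed_speed(distance, p=SsmParameters(), levels=SPEED_LEVELS):
    """Highest speed level whose S_p still fits within the measured separation distance.

    Returns 0.0 (protective stop) when the operator is closer than S_p
    even at the slowest level.
    """
    for v in sorted(levels, reverse=True):
        if distance >= protective_separation_distance(v, p):
            return v
    return 0.0


if __name__ == "__main__":
    params = SsmParameters()
    for d in (2.0, 1.1, 1.0, 0.95):
        print(f"separation {d:.2f} m -> speed limit {allowed_speed(d, params)} m/s")
```

In this formulation each speed level implicitly defines a safety zone whose inner boundary is S_p at that speed, so the zones shrink as the robot slows down. Computing S_p from the speed that would actually be commanded, rather than from fixed zone radii, keeps the zones consistent with the robot's reaction and stopping behaviour.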