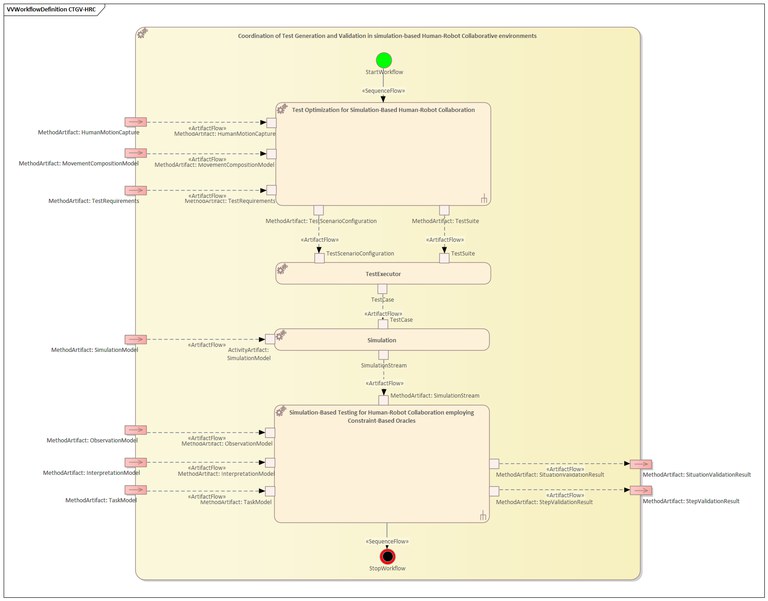

Coordination of Test Generation and Validation in Simulation-Based Human-Robot Collaborative Environments

For safety-critical systems such as human-robot collaborative environments, simulation-based testing provides a safe environment for validation activities [CTGV-HRC1]. However, simulating robots and humans in a virtual environment can be computationally expensive, so automation and optimisation techniques are essential to make efficient use of resources. One effective test optimisation technique is the generation of test suites. Although this technique usually focuses on coverage criteria, it has recently also proven successful with other criteria such as performance, test execution time or test case diversity [CTGV-HRC2]. Another key factor in the efficient validation of complex systems is the automated evaluation of test cases with oracles [CTGV-HRC3]. When oracles are applied to complex simulation-based systems, the verdict is usually produced only after the simulation has completed [CTGV-HRC4]. However, if the simulation tests are to be reused in later testing phases in a laboratory with real human-robot collaboration, the oracle must evaluate the test in real time so that its verdict can determine the course of the simulation [CTGV-HRC5].

This combined method integrates two improved methods, Test Optimization for Simulation-Based Human-Robot Collaboration (TOS-HRC) and Simulation-Based Testing for Human-Robot Collaboration employing Constraint-Based Oracles (SBT-CBO), to coordinate simulation-based testing in Human-Robot Collaboration environments. On the one hand, generating test cases for human-robot collaboration environments provides the tests needed to ensure the quality of these safety-critical systems. On the other hand, oracles that deliver real-time, automated verdicts on test executions in the simulation environment provide essential feedback for evaluating safety-critical systems. However, the two methods must be coordinated so that the generated test cases are actually executed and so that, during execution, the simulation can be observed and the test result evaluated by the oracle in real time.

The coordination of the improved methods is described in the process section, which includes a graphical representation and its description.

The workflow of the combined method is shown in the figure. It consists of the two improved methods, TOS-HRC and SBT-CBO, plus additional coordination activities. These coordination activities manage the launch of test execution in the simulation environment and forward the simulation data to the oracle as soon as it is produced.
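The coordination activities can be pictured as a producer/consumer arrangement: the simulation produces data, and the oracle consumes it while the simulation is still running rather than after it ends. The following Python sketch illustrates that idea under simplifying assumptions: the simulator is replaced by an in-memory list of samples, the oracle by a plain predicate, and `run_coordinated` is a hypothetical name. A real deployment would presumably receive samples over ROS topics instead of a `queue.Queue`.

```python
import queue
import threading
from typing import Callable, Iterable, List

_END = object()  # sentinel marking the end of the simulation stream


def run_coordinated(samples: Iterable[dict],
                    check: Callable[[dict], bool]) -> List[bool]:
    """Hypothetical coordinator: launch the 'simulation' in a producer thread
    and hand every sample to the oracle as soon as it is produced."""
    stream: queue.Queue = queue.Queue()

    def simulation() -> None:
        # Stand-in for the simulator: publish each sample, then the sentinel.
        for sample in samples:
            stream.put(sample)
        stream.put(_END)

    producer = threading.Thread(target=simulation)
    producer.start()

    verdicts: List[bool] = []
    while True:
        item = stream.get()  # blocks until the next sample is available
        if item is _END:
            break
        verdicts.append(check(item))  # oracle evaluates each sample on arrival
    producer.join()
    return verdicts


if __name__ == "__main__":
    # Toy oracle: the human-robot distance must stay above 0.5 m.
    data = [{"distance": d} for d in (1.2, 0.8, 0.4, 0.9)]
    print(run_coordinated(data, lambda s: s["distance"] > 0.5))
```

The blocking `get()` is what makes the evaluation incremental: each verdict is available while later samples are still being produced.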

The process consists of four steps:

Step 1: Test Case Generation (TOS-HRC improved method)

- Input: Human Motion Capture, Movement Composition Model, Test Requirements

- Processing: Generate a test suite (test cases composed of compound worker movements) and the related test scenario configuration (execution environment conditions) from basic motion captures and the test requirements. Further details are described in the improved method TOS-HRC.

- Output: Test Suite, Test Scenario Configuration.
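The actual TOS-HRC generation algorithm is described in that method and not reproduced here. As a rough illustration of the idea in Step 1, the Python sketch below composes base movements into compound movements, discards those that violate a test requirement (here, a hypothetical maximum duration), and then greedily keeps the candidates that differ most from those already selected. This greedy max-min selection is a generic diversity technique, in line with the diversity criterion mentioned in the introduction. `TestCase`, `generate_suite` and the movement names are all hypothetical, and the test scenario configuration is omitted.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TestCase:
    movements: Tuple[str, ...]  # ordered base movements forming a compound movement
    duration_s: float           # total duration in seconds


def generate_suite(base: Dict[str, float], length: int,
                   max_duration_s: float, budget: int) -> List[TestCase]:
    """Hypothetical generator: compose base movements into compound
    movements, drop those violating a duration requirement, and greedily
    keep the candidates most different from the ones already selected."""
    candidates = [
        TestCase(combo, sum(base[m] for m in combo))
        for combo in itertools.permutations(sorted(base), length)
        if sum(base[m] for m in combo) <= max_duration_s
    ]

    def distance(a: TestCase, b: TestCase) -> int:
        # Positional (Hamming) distance between two movement sequences.
        return sum(x != y for x, y in zip(a.movements, b.movements))

    suite: List[TestCase] = []
    while candidates and len(suite) < budget:
        # Pick the candidate whose nearest selected test case is farthest away.
        best = max(candidates,
                   key=lambda c: min((distance(c, s) for s in suite), default=length))
        suite.append(best)
        candidates.remove(best)
    return suite


if __name__ == "__main__":
    base_durations = {"walk": 2.0, "reach": 1.5, "bend": 1.0}  # seconds, illustrative
    for tc in generate_suite(base_durations, length=2, max_duration_s=3.0, budget=2):
        print(tc)
```

Enumerating all permutations only scales to small movement libraries; a search-based generator would be needed for realistic sizes.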

Step 2: Convert and launch Test Case

- Input: Test Suite, Test Scenario Configuration.

- Processing: A test case is selected from the provided Test Suite, and its compound movements are converted from the motion data format to ROS format.

- Output: Test Case.
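Because Step 3 relies on the bvh-broadcaster library, the motion data are presumably stored in the BVH format. This is an assumption, not something the method states. Under that assumption, the sketch below parses the `MOTION` section of a BVH file into timestamped frames. Each frame is a plain dictionary that stands in for a ROS message; its `name`/`position` fields are loosely modelled on `sensor_msgs/JointState`, and the function name `bvh_motion_to_frames` is hypothetical. The channel names are assumed to have been read from the BVH `HIERARCHY` section beforehand.

```python
from typing import Dict, List


def bvh_motion_to_frames(motion_block: str, channels: List[str]) -> List[Dict[str, object]]:
    """Parse the MOTION section of a BVH file into timestamped frames.

    `channels` lists the channel names in the order declared in the
    HIERARCHY section (assumed to be extracted beforehand).
    """
    lines = [ln.strip() for ln in motion_block.strip().splitlines() if ln.strip()]
    if lines[0] != "MOTION":
        raise ValueError("expected a BVH MOTION section")
    n_frames = int(lines[1].split(":")[1])      # "Frames: <n>"
    frame_time = float(lines[2].split(":")[1])  # "Frame Time: <seconds>"
    frames = []
    for i, row in enumerate(lines[3:3 + n_frames]):
        values = [float(v) for v in row.split()]
        if len(values) != len(channels):
            raise ValueError(f"frame {i} has {len(values)} values, expected {len(channels)}")
        # Plain dict standing in for a ROS-style timestamped message (illustrative layout).
        frames.append({"stamp": i * frame_time, "name": list(channels), "position": values})
    return frames


if __name__ == "__main__":
    motion = "MOTION\nFrames: 2\nFrame Time: 0.5\n0.0 90.0 0.0\n1.0 45.0 10.0\n"
    for frame in bvh_motion_to_frames(motion, ["Hips_Xposition", "RightArm_Zrotation", "RightArm_Xrotation"]):
        print(frame)
```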

Step 3: Execute Test Case in Simulation Scenario

- Input: Test Case, Simulation Model

- Processing: The provided Test Case is executed in the Simulation Model using the bvh-broadcaster library, and SimulationStreams are generated.

- Output: SimulationStream
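Step 3 turns timestamped frames into a SimulationStream. The following sketch replays the frames in real wall-clock time, so a frame stamped at 0.5 s is fed to the simulation half a second after the start. It then yields one stream sample per frame. The simulation model is replaced by a hypothetical `simulate_step` callback, and `replay` is also a hypothetical name. Real-time pacing is used because the method's time-related constraints cannot be accelerated.

```python
import time
from typing import Callable, Dict, Iterable, Iterator


def replay(frames: Iterable[Dict[str, object]],
           simulate_step: Callable[[Dict[str, object]], Dict[str, object]]
           ) -> Iterator[Dict[str, object]]:
    """Feed timestamped frames into a (stand-in) simulation model at their
    real-time pace and yield the resulting SimulationStream samples."""
    start = time.monotonic()
    for frame in frames:
        # Wait until the elapsed wall-clock time reaches the frame's timestamp.
        delay = float(frame["stamp"]) - (time.monotonic() - start)
        if delay > 0:
            time.sleep(delay)
        sample = simulate_step(frame)
        sample["stamp"] = frame["stamp"]  # keep the test-case time in the stream
        yield sample


if __name__ == "__main__":
    frames = [{"stamp": 0.0, "position": [1.0]}, {"stamp": 0.05, "position": [0.4]}]
    # Toy model: report the first joint value as the human-robot distance.
    for sample in replay(frames, lambda f: {"distance": f["position"][0]}):
        print(sample)
```

Because `replay` is a generator, each sample can be forwarded to the oracle immediately instead of after the whole test case has run.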

Step 4: Real-time validation

- Input: SimulationStream, Observation Model, Interpretation Model, Task Model.

- Processing: The oracle evaluates the SimulationStreams and provides step and situation results as the verdict. Further details are described in the improved method SBT-CBO.

- Output: SituationValidationResult, StepValidationResult
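The SBT-CBO oracle and its Observation, Interpretation and Task Models are described in that method. The Python sketch below is only a deliberately simplified stand-in that shows how the two kinds of result could be produced incrementally. Situation constraints are predicates checked on every sample and produce `SituationValidationResult`s. The Task Model is reduced to an ordered list of steps, each with a completion predicate and a deadline, and produces `StepValidationResult`s. Only the two result names come from the method; their fields, the `ConstraintOracle` class, and the example constraints are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Sample = Dict[str, float]  # one SimulationStream sample, including its "stamp"


@dataclass
class SituationValidationResult:
    stamp: float
    constraint: str
    satisfied: bool


@dataclass
class StepValidationResult:
    step: str
    completed_at: Optional[float]  # None if the step was never completed in time
    passed: bool


class ConstraintOracle:
    """Hypothetical incremental oracle: situation constraints are checked on
    every sample; task steps must be completed in order, each before its deadline."""

    def __init__(self, situations: Dict[str, Callable[[Sample], bool]],
                 steps: List[Tuple[str, Callable[[Sample], bool], float]]) -> None:
        self.situations = situations  # constraint name -> predicate on a sample
        self.steps = steps            # (step name, completion predicate, deadline in s)
        self.next_step = 0
        self.step_results: List[StepValidationResult] = []

    def observe(self, sample: Sample) -> List[SituationValidationResult]:
        t = sample["stamp"]
        results = [SituationValidationResult(t, name, check(sample))
                   for name, check in self.situations.items()]
        while self.next_step < len(self.steps):
            name, done, deadline = self.steps[self.next_step]
            if t > deadline:
                # Deadline missed: fail this step and consider the next one.
                self.step_results.append(StepValidationResult(name, None, False))
                self.next_step += 1
                continue
            if done(sample):
                self.step_results.append(StepValidationResult(name, t, True))
                self.next_step += 1
            break  # at most one step is completed per sample
        return results

    def finish(self) -> List[StepValidationResult]:
        # Steps still pending when the stream ends are reported as failed.
        for name, _, _ in self.steps[self.next_step:]:
            self.step_results.append(StepValidationResult(name, None, False))
        self.next_step = len(self.steps)
        return self.step_results
```

For example, with a `safe_distance` situation (`distance >= 0.5`) and two steps, "reach" (deadline 1.0 s) and "place" (deadline 2.0 s), a sample at 0.5 s with the hand raised completes "reach" while reporting a violated situation if the distance is 0.4 m. If "place" is first satisfied only at 2.5 s, it is reported as failed.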

Method artefacts:

- Inputs:
  - Human Motion Capture
  - Movement Composition Model
  - Test Requirements
  - Simulation Model
  - Observation Model
  - Interpretation Model
  - Task Model
- Intermediate results:
  - Test Suite
  - Test Scenario Configuration
  - Test Case
  - SimulationStream
- Outputs:
  - SituationValidationResult
  - StepValidationResult
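The grouping of artefacts into inputs, intermediate results and outputs follows directly from what each process step consumes and produces. As an illustration, the Python sketch below derives the three groups from the step definitions. This can serve as a quick consistency check when steps are added or changed. The `STEPS` table is transcribed from the process description; the `classify` helper is hypothetical and not part of the method's tooling.

```python
from typing import Dict, List, Set, Tuple

# (step, inputs, outputs), transcribed from the four process steps.
STEPS: List[Tuple[str, Set[str], Set[str]]] = [
    ("Step 1", {"Human Motion Capture", "Movement Composition Model", "Test Requirements"},
     {"Test Suite", "Test Scenario Configuration"}),
    ("Step 2", {"Test Suite", "Test Scenario Configuration"}, {"Test Case"}),
    ("Step 3", {"Test Case", "Simulation Model"}, {"SimulationStream"}),
    ("Step 4", {"SimulationStream", "Observation Model", "Interpretation Model", "Task Model"},
     {"SituationValidationResult", "StepValidationResult"}),
]


def classify(steps: List[Tuple[str, Set[str], Set[str]]]) -> Dict[str, Set[str]]:
    """Split artefacts into external inputs, intermediate results and final outputs."""
    produced: Set[str] = set()
    consumed: Set[str] = set()
    external: Set[str] = set()
    for _, inputs, outputs in steps:
        # Anything consumed before an earlier step produced it must come from outside.
        external |= inputs - produced
        consumed |= inputs
        produced |= outputs
    return {
        "inputs": external,
        "intermediate": produced & consumed,  # produced by one step, used by another
        "outputs": produced - consumed,       # produced but never consumed
    }


if __name__ == "__main__":
    for group, artefacts in classify(STEPS).items():
        print(group, sorted(artefacts))
```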

Benefits:

- This method automates the validation of simulation-based tests for human-robot collaborative environments. Automation is achieved by coordinating test generation with test evaluation in the oracle.

- The combined approach allows the infrastructure to be reused to run tests and obtain validation results in a distributed way.

- The testing infrastructure could also be used for non-simulation-based testing scenarios (e.g., in laboratories with real robots).

- Together with the coordination subsystem, the OLIMPUS platform could be used in production environments.

Limitations:

- Simulators and robots must be ROS-compatible.

- Generating the set of base movements requires an initial recording, which depends on the domain and use case. Although movement databases exist, they may not contain the base movements needed for a given domain and use case.

- Building the constraint-based knowledge model is a complex and time-consuming task.

- Time-related constraints must be respected, so simulations cannot be accelerated to save time.

Improvements over the state of the art before the VALU3S project:

The proposed combined method improves the effectiveness of verification and validation by automating both test case generation and oracle-based validation.

Addressed gaps:

- Functionality:
  - [GAPM_TOS03] Generating test cases in human-robot collaboration scenarios can be a manual and time-consuming activity. To address this gap, a test case generator will be developed for the selected simulation platform.
  - [GAPM_SBT01] In some cases, human intervention is needed to determine the verdict of a simulation run, which requires effort from the validation engineer. To address this gap, an oracle for diagnosing the human-robot interaction will be included.
  - [GAPM_CTGV-HRC01] Execution coordination and management between the two improved methods (SBT-CBO and TOS-HRC) will be included to handle multiple tests and test suites.

References:

- [CTGV-HRC1] A. Arrieta, S. Wang, U. Markiegi, G. Sagardui and L. Etxeberria, "Employing Multi-Objective Search to Enhance Reactive Test Case Generation and Prioritization for Testing Industrial Cyber-Physical Systems," IEEE Transactions on Industrial Informatics, vol. 14, no. 3, pp. 1055-1066, Mar. 2018, doi: 10.1109/TII.2017.2788019.

- [CTGV-HRC2] G. Grano, C. Laaber, A. Panichella and S. Panichella, "Testing with Fewer Resources: An Adaptive Approach to Performance-Aware Test Case Generation," IEEE Transactions on Software Engineering, vol. 47, no. 11, pp. 2332-2347, 2021, doi: 10.1109/TSE.2019.2946773.

- [CTGV-HRC3] A. Arrieta, J. Agirre and G. Sagardui, "A Tool for the Automatic Generation of Test Cases and Oracles for Simulation Models Based on Functional Requirements," in 2020 IEEE International Conference on Software Testing, Verification and Validation Workshops (ICSTW), Porto, Portugal, 2020, pp. 1-5, doi: 10.1109/ICSTW50294.2020.00018.

- [CTGV-HRC4] L. V. Sartori, "Simulation-Based Testing to Improve Safety of Autonomous Robots," in 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), 2019, pp. 104-107, doi: 10.1109/ISSREW.2019.00053.

- [CTGV-HRC5] A. Aguirre, A. Lozano-Rodero, L. M. Matey, M. Villamañe and B. Ferrero, "A Novel Approach to Diagnosing Motor Skills," IEEE Transactions on Learning Technologies, vol. 7, no. 4, pp. 304-318, 2014, doi: 10.1109/TLT.2014.2340878.