Virtual & augmented reality-based user interaction V&V and technology acceptance

Virtual Reality (VR) and Augmented Reality (AR) are powerful tools for the verification and validation of systems that are either not yet completely built or whose unexpected behaviours could harm people or equipment. This is mainly because end-users can interact with a virtual representation of the system under validation, which cannot harm them if there is a failure. Technology is expected not only to be safe: the humans interacting with it must also accept it, which requires that it be tailored to their particular needs, and must trust it and its functioning [VUR1]. In this context, trust can be defined as an attitudinal judgement of the degree to which a user can rely on an agent (here, the collaborative robot) to achieve his/her goals under conditions of uncertainty [VUR2].

This method, originally described in [VUR3, VUR4], is applied to the monitoring of dependent people in a home environment: an unmanned autonomous vehicle (e.g., a drone) monitors the physical and emotional state of dependent people in their own homes. Safety and efficiency of human-robot collaboration often depend on appropriately calibrating trust towards the robot and on using a user-centred approach to understand what influences the development of trust [VUR5]. Trust is therefore central to the adoption of assistive technologies. However, the way people accept collaborative robots in their lives is still unknown, and understanding it is essential to overcoming resistance towards them [VUR6]. Evaluating whether the person feels safe and comfortable with the proposed solution thus becomes an important aspect of guaranteeing a safe environment.

In order to evaluate the users’ sense of safety and comfort, surveys and interviews can be conducted in which the participants rate different parameters. For example, in [VUR4], the trajectory of an unmanned aerial vehicle (UAV) during the monitoring process is evaluated in a VR environment. The study focusses on three key parameters: (i) the relative flight height, (ii) the speed of the UAV during its lap around the person, and (iii) the shape of the trajectory that the UAV follows around him/her.
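To make the three parameters concrete, the following is a minimal sketch of how one lap around the person could be generated as timed waypoints. It is not the trajectory generator used in [VUR3, VUR4]; the function name `lap_waypoints`, the `Waypoint` type, the elliptical parametrisation of the trajectory shape, and all numeric values are assumptions made for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    t: float  # seconds since the start of the lap
    x: float  # metres, person standing at the origin
    y: float
    z: float  # metres above the floor


def lap_waypoints(semi_axis_x, semi_axis_y, person_height,
                  relative_height, speed, n_segments=72):
    """Return timed waypoints for one closed lap around the person.

    Hypothetical parametrisation: the trajectory shape is an ellipse
    (a circle when both semi-axes are equal), the flight height is given
    relative to the person's height, and the UAV moves at constant speed.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    z = person_height + relative_height
    # Sample the closed curve, repeating the first point at the end.
    points = [
        (semi_axis_x * math.cos(2 * math.pi * k / n_segments),
         semi_axis_y * math.sin(2 * math.pi * k / n_segments))
        for k in range(n_segments + 1)
    ]
    waypoints = [Waypoint(0.0, points[0][0], points[0][1], z)]
    travelled = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        # Constant speed: timestamp = distance travelled so far / speed.
        travelled += math.hypot(x1 - x0, y1 - y0)
        waypoints.append(Waypoint(travelled / speed, x1, y1, z))
    return waypoints


# Example condition (illustrative values): circular lap of radius 1.5 m,
# flown 0.5 m above a 1.7 m tall person at 0.5 m/s.
lap = lap_waypoints(1.5, 1.5, person_height=1.7,
                    relative_height=0.5, speed=0.5)
```

Varying `relative_height`, `speed`, and the two semi-axes independently would give one experimental condition per combination, each of which participants could then rate for safety and comfort.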

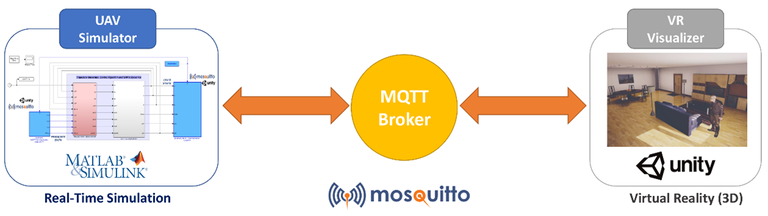

The technological approach followed to support human-robot interaction (HRI) involving end-users is described in [VUR3]. It relies on a distributed architecture with two main modules: the UAV Simulator (implemented in MATLAB/Simulink), which reproduces the flight of the UAV taking its dynamics into account and generates the trajectories; and the VR Visualiser (implemented in Unity3D), which renders the virtual UAV and its behaviour, as well as the virtual environment in which the flight takes place. The two modules communicate with each other using the MQTT protocol.

Figure. System architecture of the VUR method implementation.
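As an illustration of the decoupling between the two modules, the sketch below shows one way a simulator could serialise the UAV pose into an MQTT payload and a visualiser could decode it. The message format used in [VUR3] is not given here, so the topic name `uav/pose`, the `UavPose` fields, and the JSON encoding are all hypothetical; the actual modules are implemented in Simulink and Unity3D rather than Python.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical topic name; the real topic layout is not specified here.
POSE_TOPIC = "uav/pose"


@dataclass(frozen=True)
class UavPose:
    """Assumed pose sample exchanged between simulator and visualiser."""
    t: float      # simulation time in seconds
    x: float      # position in metres
    y: float
    z: float
    roll: float   # attitude in radians
    pitch: float
    yaw: float


def encode_pose(pose: UavPose) -> bytes:
    """Simulator side: serialise a pose as a compact UTF-8 JSON payload."""
    return json.dumps(asdict(pose), separators=(",", ":")).encode("utf-8")


def decode_pose(payload: bytes) -> UavPose:
    """Visualiser side: rebuild the pose from a received payload."""
    return UavPose(**json.loads(payload.decode("utf-8")))


# Round trip of one sample, as it would travel through the broker.
sample = UavPose(t=0.02, x=1.5, y=0.0, z=2.2, roll=0.0, pitch=0.01, yaw=1.57)
payload = encode_pose(sample)
received = decode_pose(payload)
```

With an MQTT client library, the simulator side would publish `payload` on `POSE_TOPIC` and the visualiser side would subscribe to the same topic. Because the modules share only the topic and the message format, either one could be replaced without changing the other.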

This method has so far been applied to study the acceptance of monitoring robots [VUR3, VUR4], a scenario in which little human-robot interaction was considered. However, it can be extended to other areas with greater human-robot interaction, such as Industry 4.0.

Early testing before the physical robot solution is deployed

Allows collaboration experience to be gained in a safe environment without using the real robot, thus reducing risks

The differences in the interaction/collaboration between simulation and reality

The cost of building the VR/AR simulator

[VUR1] van den Brule, R., Dotsch, R., Bijlstra, G. et al. Do Robot Performance and Behavioral Style affect Human Trust? Int J of Soc Robotics 6, 519–531 (2014). https://doi.org/10.1007/s12369-014-0231-5

[VUR2] Lewis, M., Sycara, K., & Walker, P. The role of trust in human-robot interaction. In Foundations of trusted autonomy (pp. 135-159). Springer, Cham. (2018). doi: 10.1007/978-3-319-64816-3_8.

[VUR3] Belmonte, L.; Garcia, A.S.; Segura, E.; Novais, P.J.; Morales, R.; Fernandez-Caballero, A. Virtual Reality Simulation of a Quadrotor to Monitor Dependent People at Home. IEEE Transactions on Emerging Topics in Computing, 2020. doi:10.1109/TETC.2020.3000352.

[VUR4] Belmonte, L.M.; García, A.S.; Morales, R.; de la Vara, J.L.; López de la Rosa, F.; Fernández-Caballero, A. Feeling of Safety and Comfort towards a Socially Assistive Unmanned Aerial Vehicle That Monitors People in a Virtual Home. Sensors 2021, 21, 908. doi: 10.3390/s21030908.

[VUR5] Okamura K, Yamada S. Adaptive trust calibration for human-AI collaboration. PLOS ONE 15(2): e0229132. (2020). doi: 10.1371/journal.pone.0229132.

[VUR6] Martín Rico, F.; Rodríguez-Lera, F.J.; Ginés Clavero, J.; Guerrero-Higueras, Á.M.; Matellán Olivera, V. An Acceptance Test for Assistive Robots. Sensors (2020), 20, 3912. doi: 10.3390/s20143912.